OpenAI: Exodus

🌈 Abstract

The article discusses recent high-profile departures from OpenAI, particularly of key safety researchers like Ilya Sutskever and Jan Leike. It analyzes the implications of these departures, the potential reasons behind them, and the concerning revelations about OpenAI's practices around non-disparagement agreements and the treatment of departing employees.

🙋 Q&A

[01] Departures of Sutskever and Leike

1. What were the key points made about the departures of Ilya Sutskever and Jan Leike from OpenAI?

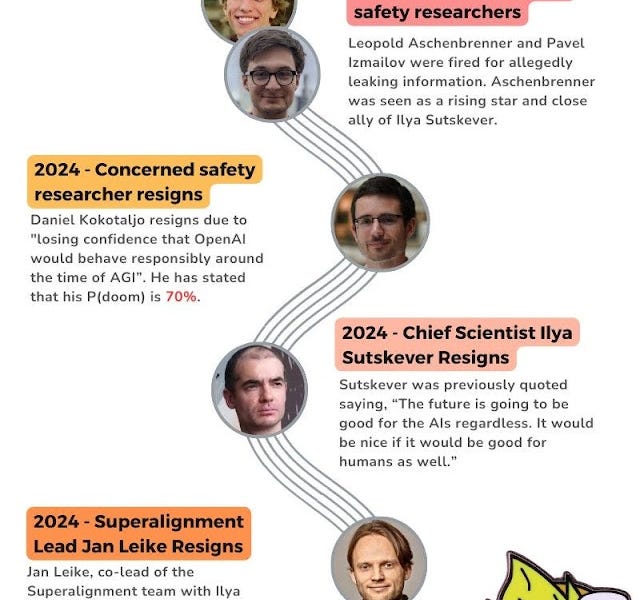

- Ilya Sutskever and Jan Leike, two prominent safety researchers who co-led OpenAI's Superalignment team, have left the company.

- Their departures are part of a broader pattern of safety researchers leaving OpenAI in recent months.

- Jan Leike offered an explanation for his departure, saying that OpenAI had lost its focus on safety and had grown increasingly hostile to it. He said the Superalignment team had been starved of resources and that safety had been neglected across the board.

- Many in the AI safety community regard the departures of Sutskever, Leike, and numerous other safety researchers as highly concerning.

2. How did OpenAI's leadership respond to the departures?

- Sam Altman acknowledged that there was much work still to do on safety, but his response was seen as providing no new information.

- Greg Brockman expressed gratitude for Sutskever's contributions and said Jakub Pachocki would be the new Chief Scientist.

3. What was the reaction from the broader community to these departures?

- Many saw the departures as a "canary in the coal mine" and an indication that OpenAI is no longer prioritizing safety.

- Some were more optimistic, suggesting the departures could lead to a reallocation of resources to other safety efforts.

- Others were critical, arguing that OpenAI is prioritizing capabilities over safety.

[02] Non-Disparagement Agreements and NDAs

1. What did the article reveal about OpenAI's use of non-disparagement agreements and NDAs?

- OpenAI has been systematically forcing departing employees to sign draconian lifetime non-disparagement agreements, which prohibit them from criticizing the company or even revealing that the agreement exists.

- Employees are threatened with the loss of their vested equity if they refuse to sign these agreements.

- This practice appears to be highly unusual and concerning, since it effectively silences former employees and prevents them from speaking out about how the company operates.

2. How did OpenAI respond to these revelations?

- Sam Altman acknowledged that the provision about potential equity cancellation in the exit documents was a mistake and should never have been there.

- Altman promised to fix the issue and said that vested equity would not be taken away if employees refused to sign the non-disparagement agreements.

- However, many were skeptical of Altman's response, noting that the threat had already been effective in silencing former employees.

3. What are the broader implications of OpenAI's use of these non-disparagement agreements and NDAs?

- The practice raises concerns about OpenAI's commitment to transparency and accountability, since the company appears to have been actively suppressing criticism and feedback from former employees.

- It suggests a culture of secrecy and a focus on reputation management rather than on addressing legitimate concerns about the company's safety practices.

- The revelations have damaged trust in OpenAI's leadership and in its ability to handle the development of advanced AI systems responsibly.

[03] Reactions and Perspectives

1. What were some of the different perspectives and reactions expressed in the article?

- Some saw the departures as a positive, arguing that OpenAI's approach to safety was flawed and that the resources could be better allocated elsewhere.

- Others were more concerned, viewing the departures as a sign that OpenAI is no longer prioritizing safety and is instead focused on capabilities and product development.

- There were also calls for increased transparency, accountability, and whistleblower protections in the AI industry.

2. How did the article suggest the community should respond to these events?

- The article emphasized the need for the community to carefully evaluate statements from current and former OpenAI employees, given the potential for non-disparagement agreements and NDAs to silence criticism.

- It encouraged people to share information and insights, while respecting the constraints that former employees may be under.

- The article also suggested that the community should closely monitor how OpenAI handles the non-disparagement agreements and NDAs going forward, as this will be a key test of its commitment to transparency and responsible AI development.